
Elon Musk is shaking up both the car and space industries with Tesla and SpaceX, respectively. The irony is that while Tesla is upending the combustion engine for land transportation with zippy and stylish electric cars, SpaceX has not only to embrace but to create new combustion technologies to get a manned mission to Mars, which Musk not only dreams of doing but is making happen.

Getting a small group of human beings to Mars and back is no easy task, we learned at the recent GPU Technology Conference in San Jose hosted by graphics chip and accelerator maker Nvidia. One of the problems with such a mission is that you need a very large and efficient rocket engine to get the required amount of material into orbit, explained Adam Lichtl, who is director of research at SpaceX and who, with a team of a few dozen programmers, is trying to crack the particularly difficult task of better simulating the combustion inside of a rocket engine. You need a large engine to shorten the trip to Mars, too.

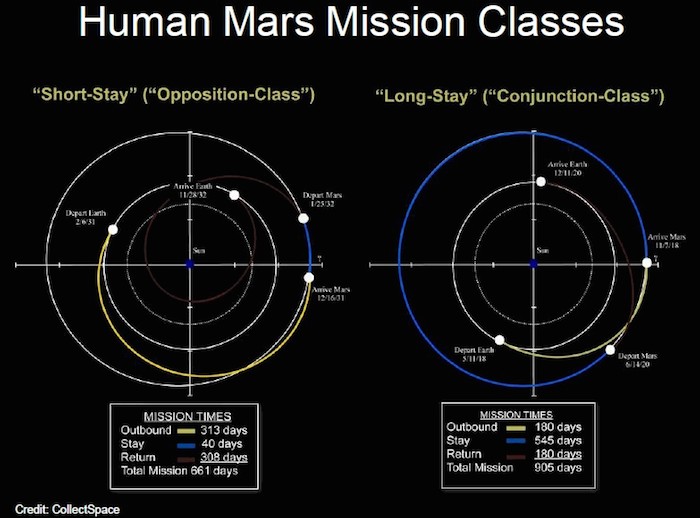

“One of the major problems is radiation exposure,” explained Lichtl. “To get to Mars, you need to align your launch with the correct window to an orbit given the amount of energy that your rocket can produce. Anyone who has played Kerbal Space Program has seen this is delta-v, which is the change in one orbit – say one around the Earth – and escaping that by giving yourself a delta-v and a different trajectory that will intersect with Mars. We need vehicles that can provide as much delta-v as possible in order to shorten that trip to Mars. In addition, there is a second major obstacle of actually staying on Mars – not just taking a robot or a rover or going on a suicide trip, but a real expedition to Mars – and that is infrastructure. When the pioneers went across the country to settle, they had to build their own cabins and they had to hunt for food. But they had air. It is a little bit more tricky on Mars.”


Not only do you need a lot of stuff to get to Mars and sustain a colony there, but you also need a way to generate fuel on Mars to come back to Earth. All of these factors affect the design of the rocket engine.

The International Space Station weighs about 450 tons, and it took 36 Space Shuttle missions and five Proton rocket launches to heft its parts into space. The NASA Mars Design Reference Architecture wants to get about 300 tons of material into Earth orbit and assemble a vehicle for the Mars trip – and do it in three launches of 100 tons each. Building this vehicle and giving it fuel from Earth is relatively easy, but the rocket on the spacecraft has to be able to burn methane as a fuel, because this can be synthesized relatively easily from water in the Martian soil and carbon dioxide in the Martian air. (The current Merlin 1D engines used by SpaceX in its launch vehicles run on kerosene, a much denser fuel that produces more thrust and that is made through the distillation of oil.)

As if these were not problems enough, there is another really big issue. The computational fluid dynamics, or CFD, software that is used to simulate the movement of fluids and gases and their ignition inside of all kinds of engines is particularly bad at assisting in rocket engine design.

“Methane is a fairly simple hydrocarbon that is perfectly good as a fuel,” Lichtl said. “The challenge here is to design an engine that works efficiently with such a compound. But rocket engine CFD is hard. Really hard.”

And so, SpaceX is working with various academic research institutions and Sandia National Laboratories to come up with its very own CFD software, which will be used to create future – and beefier – versions of the company’s Merlin rocket engines suitable for the trip to Mars and able to burn methane as a fuel.

Lichtl is an interesting choice to head up the design of the rocket engines at SpaceX, which seems like a dream job if you ask me. While a graduate student at Carnegie Mellon University, Lichtl developed Monte Carlo and high-energy physics simulations, and then he worked at Brookhaven National Laboratory as a post-doc doing high-energy physics research. This was followed up by a stint of several years at Morgan Stanley as a quant and then as a strategist for the oil and gas and then base and precious metals desks at the financial services firm. Lichtl joined SpaceX as a principal propulsion engineer two years ago and has risen through the ranks to head up the Mars engine design effort.

Stephen Jones, who is the lead software engineer at SpaceX and a former engineer at Nvidia, is running the project to develop the company’s homegrown CFD software. This software has not yet been given a name, and the techniques that SpaceX has developed could ironically be used to improve all kinds of combustion engines – including those used in cars. (Elon Musk might not like that.)

Existing CFD Not Well Suited To Rockets

At its engine plant in Texas, SpaceX is trying out a number of different injectors and other parameters to squeeze the most performance out of its engines, and it runs tests every day. These tests are expensive and, more importantly, even if you design engines and do physical testing on them and layer them with all manner of sensors on the outside, you cannot see what is going on inside the engines as they run. It is far better to simulate all of the components of the engine and their fuels and narrow down the injector configurations through simulations and then do the design, manufacturing, and physical testing on just a few, optimal configurations.

“Another very important insight that can be gained with rocket engines is that of combustion instability, which is the coupling of pressure waves and chemical energy release. This is a phenomenon that has delayed many engine projects for many years. It is the bane of engine development. The engine starts to shake and either it shakes so violently it comes apart or you can’t put a payload on top of the vehicle because it will shake it too much.”

Automobile engine and turbine engine manufacturers have used CFD to radically improve the efficiency of these engines. But the timescales are much more confined and so are the areas where the combustion reaction is taking place. These engines have far less complex chemical reactions and physical processes to simulate than what is going on in a much larger rocket engine. So you can’t just take CFD software that was designed for an internal combustion engine like the one that Tesla is trying to remove from the roads over the next several decades and use it to simulate a rocket engine.

At the molecular scale in the fuel and oxidizers, reactions inside of a rocket engine take place at between 10⁻¹¹ and 10⁻⁹ seconds, and flow fields (or advection) occur at between 10⁻⁷ and 10⁻⁶ seconds, and the acoustical vibrations and the chamber residence (when the fuel is actually burning in and being ejected by the rocket motor) occur at between 10⁻⁴ and 10⁻³ seconds. This is a very broad timescale to have to cover in a CFD simulation.
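To get a feel for how broad that range is, here is a rough back-of-the-envelope sketch in Python. It takes the timescales quoted above and assumes, purely for illustration, a simulation that marches forward in fixed steps as small as the fastest chemical timescale. Real solvers typically use implicit or multi-rate schemes, and the function and variable names here are made up for the example.

```python
import math

# Timescales quoted in the text, in seconds: (fastest, slowest) for each process.
TIMESCALES = {
    "chemical reactions": (1e-11, 1e-9),
    "flow (advection)": (1e-7, 1e-6),
    "acoustics and chamber residence": (1e-4, 1e-3),
}


def fastest_and_slowest(scales):
    """Return the shortest and longest time found in the table."""
    fastest = min(lo for lo, _ in scales.values())
    slowest = max(hi for _, hi in scales.values())
    return fastest, slowest


def decades_spanned(scales):
    """Orders of magnitude between the fastest and slowest timescales."""
    fastest, slowest = fastest_and_slowest(scales)
    return math.log10(slowest / fastest)


def steps_needed(scales):
    """Fixed steps of the fastest time needed to cover the slowest time
    (an illustrative assumption, not how a production solver steps)."""
    fastest, slowest = fastest_and_slowest(scales)
    return round(slowest / fastest)


print(f"{decades_spanned(TIMESCALES):.0f} orders of magnitude")
print(f"{steps_needed(TIMESCALES):.0e} fixed steps per chamber residence time")
```

Under that naive assumption, covering one chamber residence time takes on the order of a hundred million steps, which is why the spread in timescales matters as much as the spread in length scales.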


The physical size of the reactions that need to be simulated is also a problem that other combustion CFD programs do not have to cope with. At one extreme, the combustion chamber of a rocket motor is around 1 meter or so long, and at the other extreme is what is called the Kolmogorov scale, at one micrometer, which controls the rate of viscous dissipation in a turbulent fluid and therefore determines the rate of combustion in the rocket engine. (Basically, the exploded fuel creates ever smaller eddies of chemically reacting fuel and oxidizer, starting with the injection at a large scale and ending with combustion at the Kolmogorov scale, where the extreme friction between these components creates the heat that ejects the material from the rocket, producing thrust.) That is a factor of 1 million in scale that the simulation has to cope with on the physical level.

“If you think about subdividing any sort of CFD mesh by powers of two, over and over again, you need to subdivide it by about 20 times in order to span that kind of dynamic range in length scales,” says Lichtl. “If you were to uniformly populate a grid the size of a combustion chamber, we are talking about yottabytes of data. This is not feasible.”
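The arithmetic behind the "about 20 times" figure is easy to check. The sketch below uses the two length scales given in the text (a chamber of roughly 1 meter and a Kolmogorov scale of one micrometer); the function names are invented for this illustration, and the cell count covers a single snapshot of a uniform 3D grid only.

```python
import math

CHAMBER_LENGTH_M = 1.0      # "around 1 meter or so", from the text
KOLMOGOROV_SCALE_M = 1e-6   # "one micrometer", from the text


def halvings_needed(largest, smallest):
    """How many times a cell must be split in two to shrink from largest to smallest."""
    return math.ceil(math.log2(largest / smallest))


def uniform_cell_count(levels, dims=3):
    """Cells in a uniform grid refined 'levels' times along each of 'dims' axes."""
    return (2 ** levels) ** dims


levels = halvings_needed(CHAMBER_LENGTH_M, KOLMOGOROV_SCALE_M)
cells = uniform_cell_count(levels)

print(f"{levels} halvings per axis")
print(f"{cells:.2e} cells in a uniform 3D grid")
```

Twenty halvings per axis gives 2^60 cells, a little over 10^18, and that is before multiplying by the number of variables stored in each cell and the number of time steps kept.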

What rocket designers do is what others who do simulation and modeling typically do, and that is either to have a coarse grained simulation with lots of features but pretty low resolution or a much smaller simulation, in terms of scales of time and space, but with a richer sense of what is going on.

“Why not have it both ways?” Lichtl asks rhetorically. “The interesting thing about turbulence is that even though there is structure at all scales, it is not dense. You do not have to resolve down to the finest scales everywhere. It is really fractal in nature. This lower or fractal dimensionality allows us to concentrate computing resources where it is needed. You can think of this as a glorified compression algorithm.”

The trick is to do all of the mathematics on the compressed fractal data that describes the turbulent fluids without having to decompress that data, and to accomplish this, software engineers at SpaceX have come up with a technique called wavelets local fractal compression. This is done by using an adaptive grid for the simulation, splitting the difference between a structured grid at a certain scale and an unstructured grid that offers a non-uniform chopping of simulation space that is related to the scale of the features around it. Both of these are static and have to be configured before the simulation begins, but what SpaceX has come up with is both dynamic and automatic.
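SpaceX has not published its code, so the following is only a toy illustration of the general idea that wavelet coefficients are sparse when fine structure is localized. It uses the plain Haar wavelet with a hard threshold on a fixed 1D signal containing one sharp front. The signal, the threshold, and all names are assumptions made for this sketch, and unlike the approach described above it decompresses the data rather than computing on the compressed form.

```python
import math


def haar_forward(signal):
    """Multi-level Haar transform of a power-of-two-length list.
    Returns the overall mean and the detail coefficients per level, finest first."""
    approx = list(signal)
    details = []
    while len(approx) > 1:
        pairs = list(zip(approx[0::2], approx[1::2]))
        details.append([(a - b) / 2 for a, b in pairs])
        approx = [(a + b) / 2 for a, b in pairs]
    return approx[0], details


def haar_inverse(mean, details):
    """Rebuild the signal from the mean and the per-level detail coefficients."""
    approx = [mean]
    for level in reversed(details):  # coarsest level first
        finer = []
        for a, d in zip(approx, level):
            finer.extend((a + d, a - d))
        approx = finer
    return approx


def compress(details, threshold):
    """Zero out every detail coefficient whose magnitude is at most the threshold."""
    return [[d if abs(d) > threshold else 0.0 for d in level] for level in details]


N = 1024
THRESHOLD = 1e-4
# Flat almost everywhere, with one sharp front near index 300 (illustrative data).
signal = [math.tanh((i - 300.5) / 3.0) for i in range(N)]

mean, details = haar_forward(signal)
kept = compress(details, THRESHOLD)
n_kept = sum(1 for level in kept for d in level if d != 0.0)
restored = haar_inverse(mean, kept)
max_error = max(abs(a - b) for a, b in zip(signal, restored))

print(f"kept {n_kept} of {N - 1} detail coefficients")
print(f"max reconstruction error: {max_error:.2e}")
```

Only the coefficients near the front survive the threshold, which is the 1D analogue of spending grid points only where the flow has fine structure. The reconstruction error stays bounded by the threshold times the number of levels.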

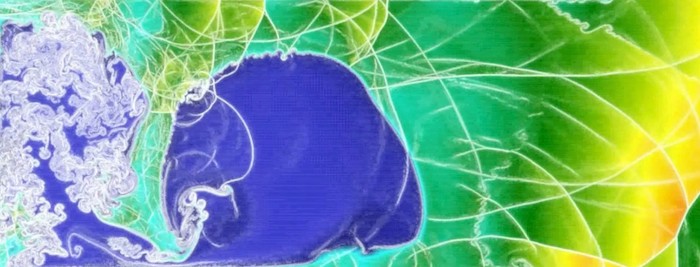

To show off its CFD code, Jones showed a simulation of some fuel and oxidizer being exploded in a box, with shock waves bouncing the fluid around and causing a tremendous amount of fractal turbulence. (This simulation is so much better than a lava lamp. You can see it at this link starting at about 36 minutes into the presentation.) This simulation shows the density of the gases changing over a span of time covering microseconds:


“If you actually did this as a fully dense calculation, this would require 300 million grid nodes,” said Jones. “We did this on a single GPU because we are only calculating it where it needs to be done and it figures it out for itself where that data needs to be.”

Jones did not divulge which GPU SpaceX was using, but presumably it was a “Kepler” class Tesla GPU coprocessor and presumably it is making use of the dynamic parallelism features of that GPU to do this adaptive grid. (This is one of the key differentiators between the GPUs that GeForce graphics cards get and the Tesla server coprocessors that have this and other features and that command a premium price because of that.)

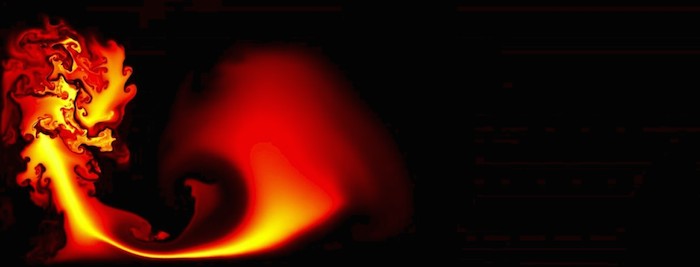

Here is the same simulation running on a single GPU that shows the ignition of the gas and the distribution of temperatures (starting at 37:45) as it explodes and the gas ricochets around:


The yellow areas are the hottest spots in the explosion, and Jones said you can zoom in by a factor of 60,000X in this simulation and still see structures.

Lichtl says that people have tried to use wavelet compression before, and these particular simulations are based on work done by Jonathan Regele, a professor at the department of aerospace engineering at Iowa State University.

“The difference is that without GPU acceleration, and without the architecture and the techniques that we just described, it takes months on thousands of cores to run even the simplest of simulations. It is a very interesting approach but it doesn’t have industrial application without the hardware and the correct algorithms behind it. What the GPUs are doing here is enabling tremendous acceleration. I am grateful to SpaceX for allowing us to basically start from scratch on CFD and in many ways reinventing the wheel. People may say, ‘Why would you do that?’ The reason is that if you are a little bit wrong in a traditional CFD simulation, you are typically OK, you are close enough to the answer to make an engineering decision. With a combustion simulation, if you get it wrong, you have to deal with the vicious interplay of all of these different physical processes.”

To be more precise, if you get the temperature wrong in the simulation by a little, you get the kinetic energy of the gas wrong by a lot because there is an exponential relationship there. If you get the pressure or viscosity of the fluid wrong by a little bit, you will see different effects in the nozzle than will happen in the real motor.
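The text does not name the exponential relationship it has in mind. One standard example in combustion modeling is the Arrhenius law, in which a reaction rate depends on temperature as exp(-Ea / (R·T)). The sketch below uses that law with illustrative numbers (a 1,500 K flame region and an activation energy of 200 kJ/mol, neither taken from the talk) to show how a small temperature error becomes a large rate error.

```python
import math

R = 8.314462618  # universal gas constant, J/(mol*K)


def arrhenius_rate(temperature_k, prefactor, activation_energy):
    """Arrhenius rate constant k = A * exp(-Ea / (R * T))."""
    return prefactor * math.exp(-activation_energy / (R * temperature_k))


def rate_error_ratio(true_temp, temp_error_fraction, activation_energy):
    """Ratio of the rate computed at a slightly wrong temperature to the true rate.
    The prefactor cancels, so it is set to 1."""
    wrong_temp = true_temp * (1 + temp_error_fraction)
    wrong = arrhenius_rate(wrong_temp, 1.0, activation_energy)
    right = arrhenius_rate(true_temp, 1.0, activation_energy)
    return wrong / right


# Illustrative values only: 1,500 K, a 5 percent overestimate, Ea = 200 kJ/mol.
ratio = rate_error_ratio(true_temp=1500.0, temp_error_fraction=0.05,
                         activation_energy=200e3)
print(f"a 5% temperature error changes the rate by a factor of {ratio:.2f}")
```

With these assumed numbers, a 5 percent error in temperature roughly doubles the computed reaction rate, which then feeds back into the heat release and the temperature itself.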

The other neat thing about its GPU-accelerated combustion CFD, says Lichtl, is that the software and hardware are really good at handling the chemical kinetic models that describe the backwards and forwards chemical reactions as fuel and oxidizer come together. Burning of hydrogen and oxygen is not a simple reaction that creates two water molecules from two hydrogen molecules and one oxygen molecule; rather, you have to simulate 23 possible reactions and 11 intermediate species of molecules to cover all the possible permutations. With the burning of methane with oxygen, there are 53 possible intermediate molecular species and 325 possible reactions.

“This is not to be taken lightly,” said Lichtl. “And having the ability to have a massively parallel system crunching this is invaluable. If you can reduce the number of grid points you have to keep active, you can use that otherwise wasted resource on something more valuable, like a chemical reaction calculation.”

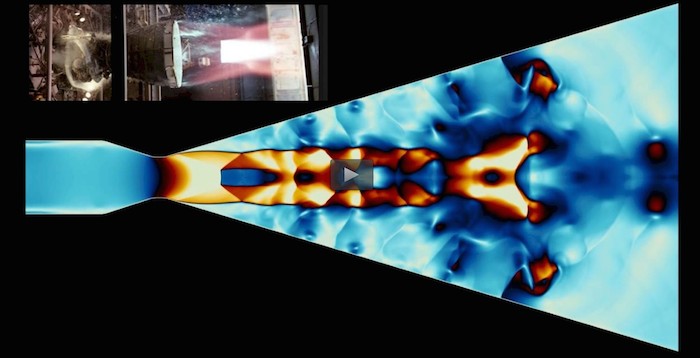

This is precisely what SpaceX is doing with its own CFD code, which simulates the chemical reactions and turbulence of fuel being burned in a combustion chamber and ejected from a nozzle (at 43:00 in the presentation):


This particular simulation is showing the acoustical properties of the burn, including the Mach disk and the trailing Mach diamonds, all generated from the physics of the SpaceX CFD model and, as you can see, pretty faithfully mirroring the actual burn of a Space Shuttle engine shown on the upper left. The gases are supersonic and clear as they exit the nozzle, but they hit the shock wave behind the nozzle, slow down and compress, and turn white.

While the homegrown SpaceX CFD code is still under development, the obvious thing to do is to scale it out by having it span multiple GPUs and then across a cluster of systems.

“The code is currently running on a single machine now, but the near-term goal is to parallelize it,” Lichtl confirmed to The Platform after his presentation.

Lichtl said that SpaceX did not have to wait until Nvidia delivers its NVLink GPU clustering interconnect to hook GPUs to each other to scale up the CFD application and that SpaceX can cluster over the PCI-Express bus for now. (He added that “NVLink would be great,” and like many customers running GPU-accelerated simulations, he wishes that NVLink were already here.) SpaceX is also looking at various interconnects to link GPUs and CPUs together and is exploring the use of the MPI protocol to have server nodes work in parallel running the CFD code.

One possible interim option ahead of NVLink might be to deploy the SpaceX CFD software on clusters built using IBM’s Power8 processors and using InfiniBand network interface cards that are tightly and efficiently coupled to the processor through IBM’s Coherent Accelerator Processor Interface, or CAPI. This is the architecture that the US Department of Energy has chosen for two of its largest supercomputers, nicknamed Summit and Sierra, that will be delivered in 2017. Those systems, as The Platform has previously reported when divulging the OpenPower roadmap put together by IBM, Nvidia, and Mellanox Technologies, will also sport a second generation NVLink for linking GPUs together and possibly 200 Gb/sec InfiniBand instead of the 100 Gb/sec speed in the contract. The thing is that Mellanox is already today shipping 100 Gb/sec ConnectX-4 network interface cards that can speak CAPI to the Power8 chip, radically lowering latency compared to 56 Gb/sec InfiniBand and offering nearly twice the bandwidth, too. There are plenty of OpenPower system makers who would love to get the SpaceX business, if the company decides to go down this route.

One important thing, Lichtl continued with his shopping list, was to have server nodes with lots and lots of GPUs.

Music to Nvidia’s ears, no doubt.

Source: GPU
