17.08.2022

Presenting data as sound can open new opportunities for accessibility, engagement, and discovery, but the technique still faces challenges.

There’s no sound in space. Or is there?

With a technique called sonification, astronomers can translate data — ranging from stars' brightness to the strength of gravitational waves — into sound. The technique most obviously, and vitally, makes such information accessible to those who are blind or have low vision. But sonification also opens up new experiences for the general public and researchers alike, Anita Zanella (National Institute of Astrophysics, Italy) and colleagues report in Nature Astronomy.

Humans are generally a visually oriented species, to the extent that we sometimes neglect our other senses. But those other faculties have capabilities that seeing does not. For example, while we may blink or look away from visual cues, we’re always processing audio input (even when we think we’re not). We’re also capable of listening to several things at once, and we’re especially good at filtering out noise to home in on what we want to understand.

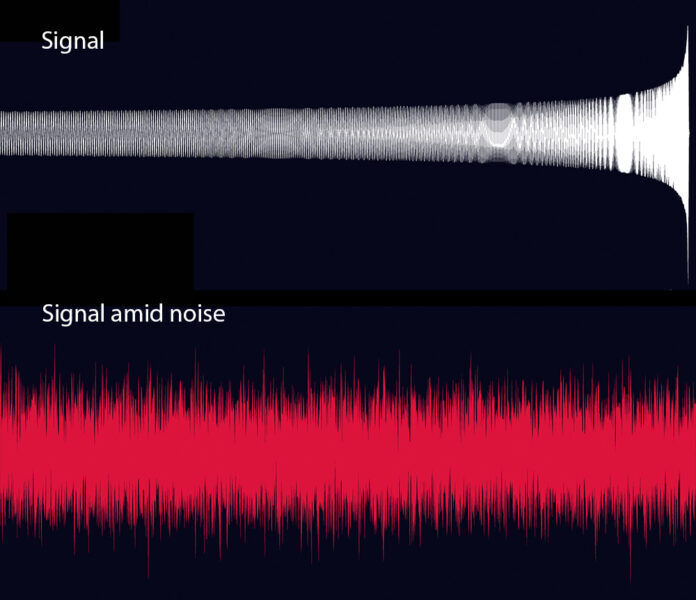

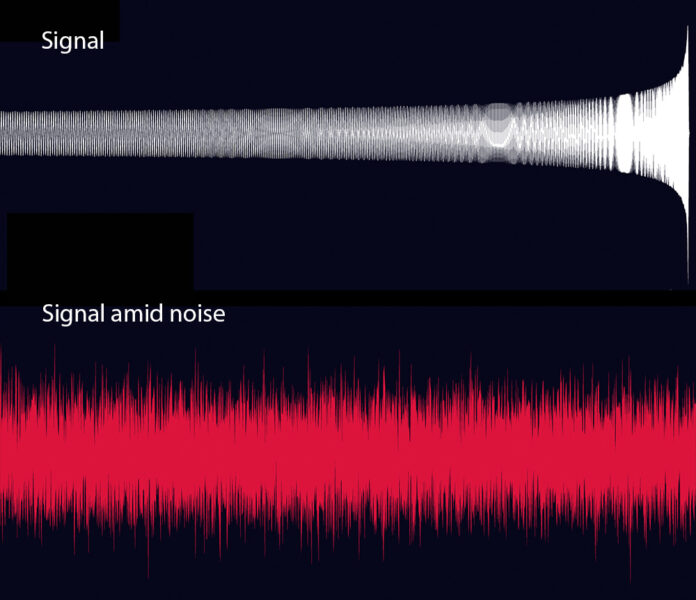

The latter is known as the cocktail party effect, meaning you can understand what your friend is saying even when others are talking loudly nearby. It’s not just a party trick — sonification can help us get at astrophysical signals hidden amid the noise.

Cardiff University’s Black Hole Hunter shows this effect in action. Click that link and you’ll see a simulated signal from two merging black holes swinging closer together until they become one in a final “chirp.” Then, you can try your hand at finding this signal buried in noise. Be warned: The noise is loud. Despite the roar, it’s surprisingly possible to make out the quiet chirp. (At least in Level One — the levels get progressively harder!)
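For readers who want to see the "signal buried in noise" idea in numbers rather than by ear, here is a minimal sketch. It is not how Black Hole Hunter works, and it is not a real gravitational-wave model: it assumes a simple linear chirp, made-up noise levels, and a basic cross-correlation search (a stand-in for what a listener does by ear). All function names and parameter values are hypothetical.

```python
import math
import random

def make_chirp(n, f_start, f_end, sample_rate):
    """Sine wave whose frequency rises linearly from f_start to f_end (Hz)."""
    duration = n / sample_rate
    rate = (f_end - f_start) / duration  # frequency increase in Hz per second
    return [math.sin(2 * math.pi * (f_start * t + 0.5 * rate * t * t))
            for t in (i / sample_rate for i in range(n))]

def find_template(data, template):
    """Slide the template along the data; return the best-matching offset."""
    m = len(template)
    scores = [sum(d * s for d, s in zip(data[k:k + m], template))
              for k in range(len(data) - m + 1)]
    return max(range(len(scores)), key=scores.__getitem__)

rng = random.Random(0)      # fixed seed so repeated runs give the same noise
sample_rate = 1000          # samples per second (illustrative value)
chirp = make_chirp(400, 50.0, 200.0, sample_rate)

true_offset = 500           # where the chirp is hidden (illustrative value)
data = [rng.gauss(0.0, 1.0) for _ in range(1200)]   # unit-variance noise
for i, s in enumerate(chirp):
    data[true_offset + i] += 0.7 * s                # chirp quieter than noise

found = find_template(data, chirp)
print("chirp hidden at", true_offset, "- search found", found)
```

Sample by sample, the chirp is weaker than the noise, yet adding up the match over the whole template should place `found` at or very near `true_offset`. That accumulation over time is roughly why a faint but structured sound can stand out from a roar.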

Black Hole Hunter / Cardiff University

Note that Black Hole Hunter is an online game built for public engagement, not a research project. In fact, public engagement is the most common primary objective among sonification projects (36%). Other objectives include research (26%), art (17%), accessibility (13%), and education (8%), according to Zanella's team.

Even so, “data as sound” has been enabling scientific discovery for decades. Most notably, a radio hiss led to the discovery of the Big Bang’s afterglow, the cosmic microwave background, in 1964. The late space scientist Donald Gurnett (University of Iowa) also spent decades translating space mission data into sound. One particularly eerie example, and the focus of some of Gurnett's research, is the set of lightning-induced plasma waves that “whistle” through Earth’s upper atmosphere. Other lightning-induced sounds recorded by plasma instruments aided in the discovery of Saturnian lightning.

Now, sonification is making data, and discovery, accessible to new populations. For example, a new project called Sensing the Dynamic Universe shows brightness changes over time for all sorts of celestial objects, including quasars, supernovae, and pulsating variable stars.

Cofounded by Paul Green and Wanda Diaz-Merced (both at the Center for Astrophysics, Harvard & Smithsonian), this project is designed for outreach and education, with accessibility as a primary goal. (Diaz-Merced is herself a blind astronomer.)

The variable sources shown currently are curated to show the best examples of each type of object. “With luck and funding, I’d ideally like to expand it to allow users to see and hear lightcurves and spectra for objects of their choice,” Green says.

Center for Astrophysics, Harvard & Smithsonian

In addition to serving the blind and those with low vision, “data as sound” also works well for those with dyslexia, autism, and really anyone who prefers to listen to data, Zanella and colleagues note in their study.

One project that combines accessibility with both public engagement and research is ARISA's Eclipse Soundscapes app. Since 2017, this app has provided sonic feedback to users as they explore a “rumble map” of a solar eclipse photo.

"[The rumble map] was amazingly useful for users who are blind and low vision, because it allowed them to build a mental map of what their sighted peers were seeing," says Henry Winter, the cofounder of ARISA and creator of the Eclipse Soundscapes app. "Anecdotally, we found that it deepened the experience for sighted users as well, as it engaged more senses."

While funding for the app has been cut, ARISA still plans to update it for the 2023 and 2024 eclipses. Because access to smartphones' haptic motors became available only after the 2017 eclipse, ARISA will also be enhancing the rumble map.

Finally, ARISA is planning a separate citizen-science project, launching next year. It will enable anyone to record the reaction of the natural world — such as birdsong and crickets’ whirr — to the sudden darkening of a solar eclipse.

The examples given here are just some of many ongoing sonification efforts, which have been ramping up in recent years. Zanella and her colleagues rounded up such projects across astronomy, finding that the cumulative number has been increasing exponentially since 2010. As of December 2021, the number stands at 98.

But sonification is still very much a niche thing, they find. Part of the problem, the team acknowledges, is that there’s no standard approach to sonifying data. Anyone seeking to do so basically has to reinvent the wheel. To get to standardization, Zanella's team writes, we simply need more studies on how well different sonification techniques work.

And to do that we need more funding, says Winter. “Making meaningful sonification tools takes experienced designers, programmers, and scientists as well as conducting focus groups to ensure that the tools are usable and communicate the desired information,” he explains. “Finding grants that could potentially fund this type of work has been a challenge, but ARISA Lab has been fortunate to find partners in NASA and the National Science Foundation that believe in this work and want to ensure its success.”

Another problem is how to present the error bars associated with data. Sonifying data uncertainty can be vital to distinguishing, say, whether a star is really getting brighter or whether we’re mistaking instrument noise and natural fluctuations for real change. One option, Green says, is to simply play data points with smaller errors at a louder volume and those with larger errors at a quieter one. But in many cases, it's difficult to add errors without confusing the listener.
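To make the volume-for-uncertainty idea concrete, here is a minimal sketch. It is not the method used by Sensing the Dynamic Universe or any other project named in this article. It assumes that brightness maps to pitch and that error-bar size maps to loudness, and every function name, parameter, and data value is hypothetical.

```python
import math
import struct
import wave

def sonify(values, errors, f_min=220.0, f_max=880.0,
           sample_rate=8000, note_s=0.1):
    """Turn each data point into a short tone.

    Larger values give a higher pitch; smaller errors give a louder tone.
    Returns a list of float samples in the range [-1, 1].
    """
    v_lo, v_hi = min(values), max(values)
    e_lo, e_hi = min(errors), max(errors)
    n = int(sample_rate * note_s)          # samples per note
    samples = []
    for v, e in zip(values, errors):
        # Normalise to 0..1, guarding against a constant series.
        pitch = (v - v_lo) / (v_hi - v_lo) if v_hi > v_lo else 0.5
        certainty = 1.0 - (e - e_lo) / (e_hi - e_lo) if e_hi > e_lo else 1.0
        freq = f_min * (f_max / f_min) ** pitch   # logarithmic pitch scale
        amp = 0.2 + 0.8 * certainty               # uncertain points stay audible
        samples.extend(amp * math.sin(2 * math.pi * freq * i / sample_rate)
                       for i in range(n))
    return samples

def write_wav(path, samples, sample_rate=8000):
    """Save float samples in [-1, 1] as a 16-bit mono WAV file."""
    with wave.open(path, "wb") as f:
        f.setnchannels(1)
        f.setsampwidth(2)
        f.setframerate(sample_rate)
        f.writeframes(b"".join(struct.pack("<h", int(32767 * s))
                               for s in samples))

# Made-up light curve: brightness rises while the error bars grow.
brightness = [1.0, 2.0, 3.0]
errors = [0.1, 0.5, 0.9]
samples = sonify(brightness, errors)
```

Calling `write_wav("lightcurve.wav", samples)` would save the result for listening. The sketch also hints at the difficulty described above: loudness now carries the uncertainty, so a listener could easily mistake a quiet note for a faint star rather than a poorly measured one.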

There's a lot of work to do to make sonification a standard for astronomy, but the rewards will make the efforts worthwhile.

Source: Sky & Telescope